How AI Learns from Incident Data

When incidents happen, they leave behind valuable data that AI can analyze to prevent future issues. However, most companies struggle to make use of this information because it’s scattered across tools like Slack, Jira, AWS logs, and monitoring dashboards. AI systems can bridge this gap by learning from past incidents, identifying patterns, and suggesting solutions faster than manual processes.

Key Takeaways:

- Data Challenges: Incident data is often fragmented, unlabeled, and quickly outdated as systems evolve.

- AI Solutions:

- Centralizes data from multiple sources (logs, Slack, Jira).

- Uses machine learning to find patterns and detect anomalies.

- Learns from human feedback to improve accuracy.

- Benefits:

- Cuts incident resolution time by up to 5x.

- Reduces downtime costs, which can average $4,537 per minute.

- Allows small teams to manage complex systems without adding staff.

AI tools, like those used by companies such as Zalando and Microsoft, transform incident management by automating analysis, triage, and response workflows. This reduces the burden on engineers and improves efficiency, making it easier to focus on innovation instead of firefighting.

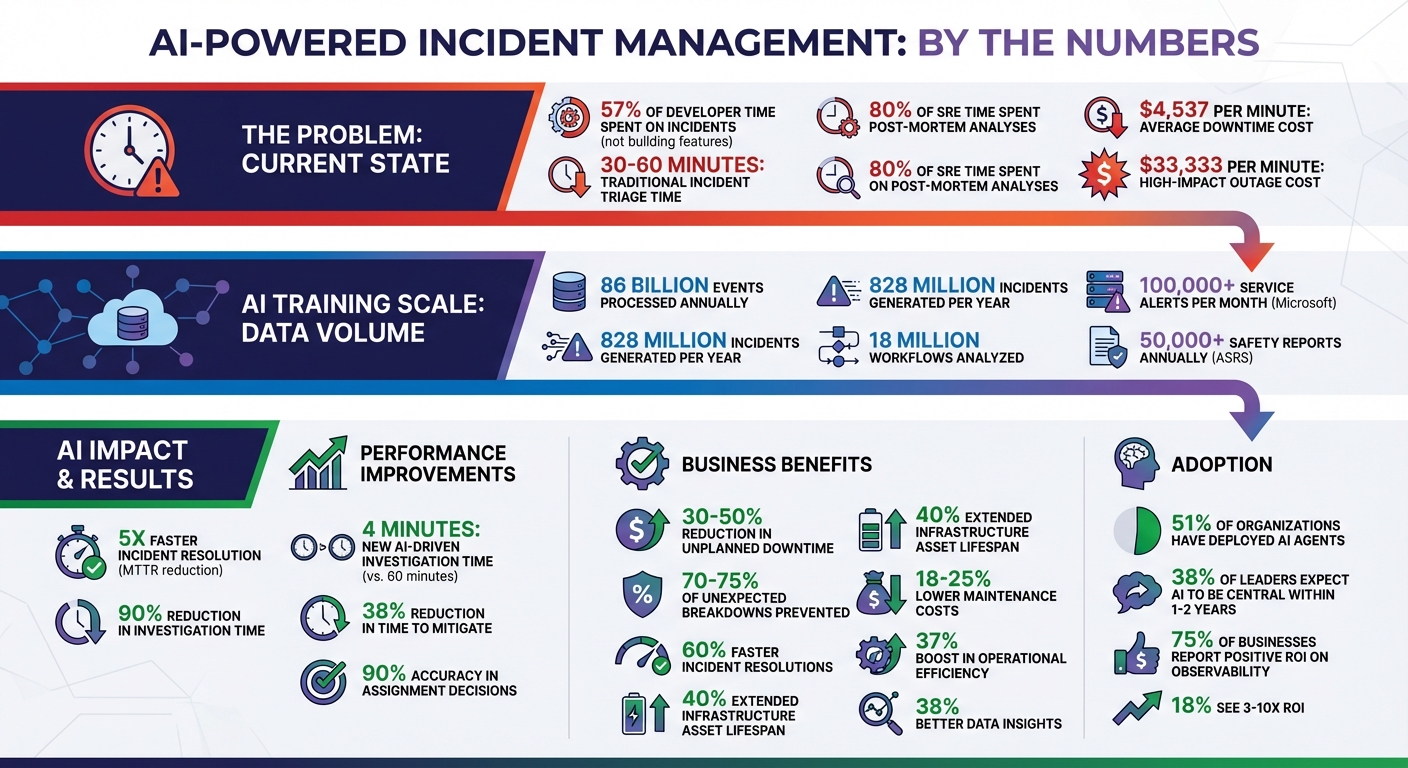

AI-Powered Incident Management: Key Statistics and Benefits

AIOps for Incident Management: ML & Correlation Explained

Common Problems When Using Incident Data for AI Learning

For AI to effectively learn from incidents, the data it relies on must be clean, well-organized, and accessible. However, there are three major hurdles: fragmented information, unlabeled records, and models losing accuracy as environments evolve.

Data Scattered Across Multiple Systems

Incident data often lives in multiple places: AWS CloudWatch, Datadog dashboards, Slack conversations, Jira tickets, Zoom recordings – you name it. This decentralization creates silos and inefficiencies, making it difficult to capture critical insights during an incident. Worse, some of the most useful information, like details from live discussions, can disappear entirely, leaving AI systems without essential context for future learning.

"Models are only as good as the data they can access – including the institutional knowledge that’s often most valuable and the transient insights that vanish during incidents." – Julia Nasser, PagerDuty

Modern incidents are complex, involving multiple teams, tools, and timelines. This makes it essential to centralize data collection. While many AI tools are designed to work with standardized logs like those from AWS or Kubernetes, root causes often hide in less conventional places, such as custom telemetry data or unique business logic. To address this, teams can use a Temporal Knowledge Graph to map relationships across services, infrastructure, and human inputs like resolution notes. Retrieval-augmented generation (RAG) can also help by indexing unstructured data – like runbooks, GitHub issues, or internal wikis – into a unified training dataset. Without this level of centralization, AI training remains incomplete. And even if the data is centralized, inconsistent labeling can still cause problems.

Missing Labels on Incident Data

Supervised learning thrives on accurately labeled data. Without proper labels, AI models struggle to identify patterns or produce reliable outputs. Unfortunately, labeling data is often more difficult and expensive than collecting it, typically requiring manual input from experts.

"Accurate data labeling ensures better quality assurance within machine learning algorithms, allowing the model to train and yield the expected output. Otherwise, as the old saying goes, ‘garbage in, garbage out.’" – IBM

Manual labeling also introduces the risk of errors, which can degrade data quality and, by extension, model performance. To make this process more efficient, teams can use active learning, where machine learning algorithms flag the most valuable unlabeled data for human review. Programmatic labeling can handle large datasets, while keeping a human in the loop ensures quality control on cases where the algorithm lacks confidence. Properly labeled, centralized data is critical for AI systems to adapt to ever-changing environments. But even with good labels, dynamic systems can still throw models off course.

Model Accuracy Degradation in Changing Environments

AI models often lose accuracy when infrastructure or environments change. This happens because models tend to have a narrow view of the data, focusing on just one stage of the software development lifecycle. On top of that, there’s often a disconnect between machine-generated alerts and human-written incident narratives – even when they describe the same problem. Without capturing both institutional knowledge and fleeting insights, models can miss key steps for resolving issues.

Take Microsoft Azure’s Core Insights Team as an example. In January 2025, they introduced the "Triangle System", a multi-agent orchestrator for incident triage. This system used Local Triage agents trained on historical incidents and Troubleshooting Guides. By early 2025, six teams were using it, with one team reporting a 38% reduction in Time to Mitigate and a 90% accuracy rate in assignment decisions. To maintain this level of performance, they relied on continuous feedback loops, which allowed models to learn from updated runbooks and human-approved solutions. Additionally, focusing on log-template similarity instead of plain text analysis proved more effective for identifying related incidents, as logs reflect the actual symptoms of system states.

These challenges – scattered data, missing labels, and evolving environments – highlight the complexities of using incident data for AI learning. Addressing them requires thoughtful strategies and tools to ensure AI systems remain accurate and reliable.

How AI Extracts Knowledge from Incident Data

After overcoming the hurdles of scattered data and incomplete labels, the next step is figuring out how AI processes incident information. This process revolves around three main activities: gathering data from multiple sources, spotting patterns through statistical methods, and refining predictions with human input.

Collecting Data from Logs, Traces, and Configuration Files

AI systems can process millions of log lines every minute from microservices, cloud platforms, and Kubernetes clusters. To make sense of this overwhelming flow, these systems enrich logs with metadata like GeoIP, user IDs, and service tags. This added context instantly helps identify infrastructure ownership and assess the impact of issues.

To streamline analysis, AI converts raw logs into "template-ids" that form a "log signature." This allows for quick comparisons of current events with similar past incidents. For instance, if a database connection error occurs, the system can immediately match it to previous occurrences with the same signature. Typically, platforms isolate logs from a specific time frame – such as five minutes before and after an outage – to separate problematic logs from normal system activity.

Using Natural Language Processing (NLP), AI translates anomalies into plain language, making them easier for humans to understand. Some systems go even further by creating knowledge graphs that map out relationships and dependencies between services, infrastructure, and human inputs like resolution notes. These graphs enhance AI’s ability to suggest the most relevant solutions by understanding how different components interact.

"The payoff isn’t just reduced downtime, it’s the transformation of incident response from a source of organizational stress into a competitive advantage." – Elastic

For context, PagerDuty reported processing 86 billion events and generating 828 million incidents in a single year to train its AI models. This immense scale of data collection enables AI to learn from real-world scenarios rather than theoretical models. Once the data is structured and enriched, the next step is identifying patterns and anomalies.

Identifying Patterns and Detecting Anomalies

After normalizing and enriching logs, AI dives into analyzing them to uncover hidden patterns and anomalies. Through unsupervised machine learning, AI profiles typical log behavior without relying on manual rules. This adaptability allows the system to respond to changing traffic patterns and flag unusual spikes or new log categories automatically. Instead of using rigid thresholds, modern platforms apply statistical techniques to identify outliers, predicting expected event counts for specific time intervals and highlighting deviations.

Advanced methods like Differential Diagnosis (DDx), Leaf Errors tracing, and intermetric anomaly detection help pinpoint issues with precision.

Traditionally, incident triage could take 30 to 60 minutes, but AI-powered systems can deliver detailed analyses within seconds. This speed is thanks to algorithms that detect relationships across seemingly unrelated systems – patterns that might go unnoticed in manual reviews. Interestingly, 80% of Site Reliability Engineers (SREs) spend their time on post-mortem analyses, often due to insufficient information during incidents.

The industry is moving beyond simple chatbots to autonomous AI agents capable of executing workflows, making decisions, and completing tasks based on predefined playbooks. Currently, 51% of organizations have deployed such AI agents, and 38% of leaders expect them to become central to operations within the next one to two years.

Using Human Feedback to Improve AI Models

AI systems thrive on continuous learning, much of which comes from human feedback. Quick-feedback tools allow users to rate AI outputs, helping refine future predictions.

These systems also record human resolution steps, incorporating them into updated runbooks to enhance recommendations. Monitoring override rates – how often analysts adjust AI predictions – helps identify model drift and fine-tune inputs or prompts. Some platforms use similarity scores (e.g., 30%) to compare current incidents with past cases, suggesting priorities and assignees based on previous outcomes.

"The SRE Agent remembers what actually happens in your environment – changes, dependencies, past incidents, conversation history, and most critically, the steps human responders took to diagnose issues and restore service." – Julia Nasser, PagerDuty

AI tools also draft post-incident reviews, which analysts then refine. These edits are fed back into the system, improving its accuracy for future documentation. This feedback loop helps turn past mistakes into proactive measures. Organizations using generative AI in operations have reported a 37% boost in efficiency and 38% better data insights. Despite these advancements, human oversight remains critical – analysts are ultimately responsible for interpreting data and taking action, with AI serving as a powerful assistant rather than an independent decision-maker.

3 AI Learning Methods for Incident Analysis

Once AI systems gather and organize incident data, they use specific learning methods to turn that raw information into actionable insights. These methods include supervised learning for addressing known issues, unsupervised learning to uncover hidden relationships, and reinforcement learning to refine responses through iterative feedback.

Supervised Learning from Past Resolved Incidents

Supervised learning works with labeled datasets, where each input – such as logs, metrics, or traces – is paired with a specific outcome like "database failure" or "network latency". By analyzing these patterns, the system learns to map incident traits to their resolutions. Techniques like Naive Bayes or k-Nearest Neighbor (KNN) are often used to classify and predict outcomes.

For example, PagerDuty’s AI, trained on over 828 million incidents and 18 million workflows, recommends solutions that can cut investigation times by up to 90%. Similarly, in April 2025, Grammarly‘s security engineering team adopted an AI-driven workflow to handle cloud alerts. By automatically analyzing identity logs and configuration histories, they reduced investigation times from nearly an hour to just four minutes.

"Supervised learning algorithm uses a labeled dataset where every input is associated with a corresponding output label. This mapping makes it easier for the algorithm to make predictions or generate new, unseen data."

– Teradata

The standout advantage of supervised learning is its consistency. Unlike manual analysis, which can vary based on an engineer’s experience or stress level, AI offers a thorough and uniform approach to every incident.

While supervised learning tackles known problems, unsupervised methods shine when it comes to revealing hidden patterns in unlabeled data.

Unsupervised Learning for Finding Hidden Patterns

Unsupervised learning doesn’t rely on labeled data. Instead, it identifies structures and relationships within raw, unlabeled datasets without human guidance. For instance, K-means clustering groups data by shared characteristics, helping to spot recurring incident types or related alerts that might otherwise seem unrelated.

Other techniques include association rule mining, which uncovers "if-then" relationships – like finding that a specific configuration change often leads to an API failure. Dimensionality reduction methods like Principal Component Analysis (PCA) filter out noise in high-volume telemetry, allowing the model to focus on key variables.

This approach is particularly useful for detecting anomalies in cloud environments. By learning what "normal" looks like, unsupervised models can flag subtle deviations, such as slow probing attacks, gradual privilege escalations, or minor configuration changes that might evade traditional alerts.

"Unsupervised learning algorithms are better suited for more complex processing tasks, such as organizing large datasets into clusters. They are useful for identifying previously undetected patterns in data."

– Google Cloud

A practical example is the Aviation Safety Reporting System (ASRS), which processes over 50,000 safety reports annually. Without AI, identifying trends in such a massive dataset would be nearly impossible.

While unsupervised learning focuses on discovery, reinforcement learning takes it a step further by optimizing response strategies.

Reinforcement Learning for Testing Response Strategies

Reinforcement learning (RL) uses feedback loops to refine response strategies. AI agents learn by trial and error, continuously improving their actions based on outcomes from real-world environments. This allows the system to adapt to an organization’s specific operational needs without constant reprogramming.

The process typically operates in tiers. In Tier 1, the AI autonomously resolves straightforward issues. In Tier 2, it suggests multiple potential solutions for human approval, learning from those decisions to improve future predictions and actions. Human responses and incident outcomes are systematically fed back into the model for ongoing refinement.

In May 2025, Microsoft deployed autonomous agents to manage over 100,000 service alerts per month across platforms like Azure and Xbox. These agents translated Troubleshooting Guides (TSGs) into executable workflows, significantly improving TSG quality and reducing mitigation times.

"AI agents… continuously learn from operational data. With their ability to apply feedback information into their model, they improve over time without explicit reprogramming, learning which response strategies work best for specific incident types."

– PagerDuty

Today, 88% of business leaders expect AI agents to play a key role in their operations soon. The focus is shifting from basic chatbots to autonomous agents capable of executing workflows and making decisions based on current and historical data.

With supervised, unsupervised, and reinforcement learning, AI transforms incident data into proactive solutions, addressing challenges like fragmented data, missing labels, and ever-changing environments.

sbb-itb-f9e5962

Measurable Benefits of AI-Powered Incident Learning

Faster Incident Resolution Times

AI-powered systems take the guesswork out of incident management. Instead of manually sifting through logs and metrics, these systems deliver automated, in-depth analysis within seconds. They can pinpoint the exact service causing an issue – like identifying the first instance of latency in a request path.

The results are impressive. AI-powered Site Reliability Engineering (SRE) tools can slash Mean Time to Resolution (MTTR) by up to 5x. This is a big deal, especially when you consider that developers currently spend about 57% of their time tackling incidents instead of focusing on building new features.

"The agent becomes the first responder, autonomously managing alerts, performing initial triage, conducting root cause analysis, and even executing remediation workflows."

– Resolve.ai

How does it work? These systems enrich alerts with critical context – like recent code changes, deployment histories, or infrastructure events – before even notifying a human responder. They also group related alerts into a single incident and suppress transient alerts that resolve on their own, reducing alert fatigue. The result? Faster resolutions and significant savings, especially when it comes to downtime costs.

Lower Downtime and Infrastructure Costs

Downtime is costly – on average, it runs $4,537 per minute, with some high-impact outages costing as much as $33,333 per minute. AI-driven incident learning shifts the focus from reactive firefighting to proactive prevention, helping organizations cut these expenses dramatically.

For instance, businesses using AI for predictive maintenance see a 30-50% drop in unplanned downtime. Early detection of issues prevents 70-75% of unexpected breakdowns, and when incidents do occur, AI tools enable 60% faster resolutions.

Real-world examples highlight these benefits. In 2024, a Fortune 500 manufacturer and Shell saved millions by addressing potential downtime before it became a problem. BMW leveraged AI in its manufacturing plants to avoid over 500 minutes of annual production disruptions.

AI also streamlines traditionally time-consuming processes like triage and root cause analysis, which typically consume 38% of responder time. With AI, maintenance costs drop by 18-25%, and planned maintenance requires 3.2x fewer labor hours than emergency repairs.

"Full-stack observability can halve the cost of a major outage while speeding up detection and resolution, freeing up teams to focus on innovation that meets business objectives."

– Ashan Willy, CEO, New Relic

Beyond cost savings, AI extends the lifespan of infrastructure assets by around 40%, turning expensive capital investments into long-term gains. It’s no wonder 75% of businesses report a positive return on observability investments, with 18% seeing a 3-10x ROI.

Infrastructure Management for Small Teams

AI isn’t just for large enterprises – it’s a game-changer for smaller teams too. For teams of 10-50 people, AI automates complex processes and handles the overwhelming volume of data generated by modern microservices. This means 24/7 intelligent response without the need to hire additional staff. Currently, engineers spend 33% of their time handling disruptions instead of innovating, but AI gives them those hours back.

A three-tier automation framework helps small teams prioritize effectively:

- Tier 1: Fully automated tasks, like scaling databases or renewing SSL certificates.

- Tier 2: AI-led triage for partially understood issues, with solutions suggested for human approval.

- Tier 3: Human-led investigations for novel, complex problems.

This setup eliminates the need for specialized Level 2 and Level 3 operations staff while maintaining high SLA compliance.

Adoption is growing fast – 51% of companies have already deployed AI agents, and 38% of leaders expect AI to become a core part of their operations within the next 1-2 years. The benefits are clear: organizations using AI report a 37% boost in operational efficiency, 38% better data insights, 36% improved customer experiences, and 33% enhanced collaboration.

AI agents also "remember" past incidents, tracking change events and the steps taken to resolve them. This turns the unwritten knowledge of senior engineers into accessible, actionable data. These agents work tirelessly, spotting subtle connections across systems that humans might overlook.

"AI agents won’t replace humans – they will augment human capabilities and allow operations professionals to move up the value chain."

– PagerDuty

For small teams, consistency is key. Unlike manual processes that vary depending on an engineer’s experience or stress level, AI ensures everyone starts with the same context. This reduces the length of incidents, minimizes the number of responders needed, and eases the mental load on on-call staff.

TechVZero‘s Approach to AI-Powered Incident Learning

TechVZero takes AI’s potential and turns it into actionable solutions, focusing on improving incident resolution through smart infrastructure changes.

Cutting Costs Through Smarter Infrastructure

TechVZero’s AI-powered incident learning isn’t just about tech – it’s about results. For instance, they helped a client save $333,000 in a single month while stopping a DDoS attack. This approach tackles two big challenges at once: reducing costs and enhancing security, all without adding extra staff.

The process starts by identifying where money is being wasted in infrastructure spending. Instead of piling on expensive monitoring tools or dealing with inflated cloud bills, TechVZero digs deep to find the real issues. Whether it’s over-provisioned resources, inefficient workflows, or poorly designed architectures, their AI tools analyze logs and metrics to uncover the root causes faster than traditional methods.

Bare Metal Kubernetes for Efficiency

Switching to bare metal Kubernetes is a game changer, cutting out the virtualization overhead that drives up cloud costs. These migrations typically reduce infrastructure spending by 40-60%, all while meeting strict compliance standards like SOC2, HIPAA, and ISO certifications.

This setup is particularly effective for AI-driven incident learning. Bare metal infrastructure handles massive amounts of operational data more efficiently, which is crucial when systems are processing thousands of log entries per second to spot anomalies. Without the added layers of virtualization, the system detects patterns faster and responds more quickly. This streamlined infrastructure becomes a critical part of a feedback loop, enabling faster learning and better anomaly detection.

Results-Driven Pricing That Puts Clients First

Once the infrastructure is optimized, TechVZero ensures clients only pay for tangible results. Their pricing model is simple: clients pay 25% of the savings achieved over one year. If the promised cost reductions don’t happen, clients owe nothing.

This performance-based model eliminates the usual risks of hiring consultants who charge regardless of outcomes. For startups or smaller teams (10-50 people), it means they can optimize their infrastructure without needing to hire specialized staff or gamble on untested solutions. Over time, the AI systems get smarter with each incident, creating a cycle of continuous improvement. What was once a fixed operational cost now becomes a strategic advantage, driving efficiency and savings.

Conclusion

AI has reshaped how we handle incident data, turning raw logs, traces, and configuration files into structured insights. By spotting patterns and improving through feedback loops, AI enables small teams managing complex infrastructure to spend less time on crises and more time driving innovation.

The impact is clear: developers currently dedicate 57% of their time to incident response tasks. AI systems, however, can slash triage times from 30–60 minutes down to mere seconds, improving operational efficiency by 37%.

"AI agents won’t replace humans – they will augment human capabilities and allow operations professionals to move up the value chain." – PagerDuty

TechVZero builds on these advancements by delivering actionable results. Instead of piling on more monitoring tools or accepting ballooning cloud costs, it focuses on infrastructure changes that deliver measurable outcomes. Their performance-based pricing model – claiming 25% of savings for one year, with no fees if savings targets aren’t met – aligns cost optimization with business goals. For teams of 10–50 people, this approach eliminates the need for extra infrastructure specialists while ensuring reliability and security.

Shifting from reactive firefighting to proactive infrastructure management creates systems that continuously learn and improve. This evolution not only boosts resilience but also transforms operations from a cost burden into a strategic advantage.

FAQs

How does AI bring together incident data from multiple sources?

AI brings together incident data by collecting logs, metrics, alerts, and various signals from multiple systems. It then organizes and connects this information into a unified view, eliminating silos and making analysis more streamlined.

By centralizing this data, AI enhances visibility and enables quicker, automated insights. This approach helps organizations make faster decisions and respond to incidents with greater efficiency.

What challenges does AI face when learning from incident data?

AI systems encounter several hurdles when working with incident data. A key issue is the quality of the data. Incident reports are often messy, incomplete, or missing important details, which makes it tough for AI to draw accurate conclusions. This can lead to mistakes like "hallucinations", where the AI creates explanations that sound convincing but are incorrect, or misattributions, where it identifies the wrong root cause. To address these issues, human oversight is crucial – experts can validate results, fix errors, and contribute the domain knowledge that AI simply doesn’t have.

Another obstacle involves how the AI is prompted and the type of input it receives. Vague, unstructured prompts like "Investigate this" often lead to unreliable outputs. AI works best with structured inputs and clear, precise prompts, which help produce consistent and actionable results. Maintaining high-quality training data and effectively integrating tools requires continuous effort. Striking the right balance between automation and human review is essential to ensure the system remains accurate and trustworthy.

How can small teams use AI to improve incident management?

AI-driven incident management enables smaller teams to operate more effectively by cutting down on unnecessary alerts, automating repetitive processes like triage and remediation, and learning from previous incidents to address problems more quickly. This frees up engineers to concentrate on critical, high-impact tasks rather than being stuck handling routine work.

With AI in the mix, small teams can respond faster, minimize downtime, and enhance system reliability – all without needing to expand their team. It’s an efficient approach to maintaining top-notch operations while optimizing resources.