How to Manage Kubernetes Cost, Complexity, and Scale

As Kubernetes cements its position as the de facto platform for container orchestration, organizations face the inevitable challenges of managing cost, complexity, and scale. A recent discussion among Kubernetes experts examined the state of Kubernetes in 2025 in depth, covering adoption trends, operational hurdles, AI integration, platform engineering, and predictions for the future.

This article distills insights from that conversation, providing actionable analysis and guidance for IT professionals, DevOps engineers, and system administrators looking to optimize Kubernetes operations while navigating the complexities of modern cloud-native environments.

The Expanding Adoption and Maturation of Kubernetes

A Decade of Growth and Standardization

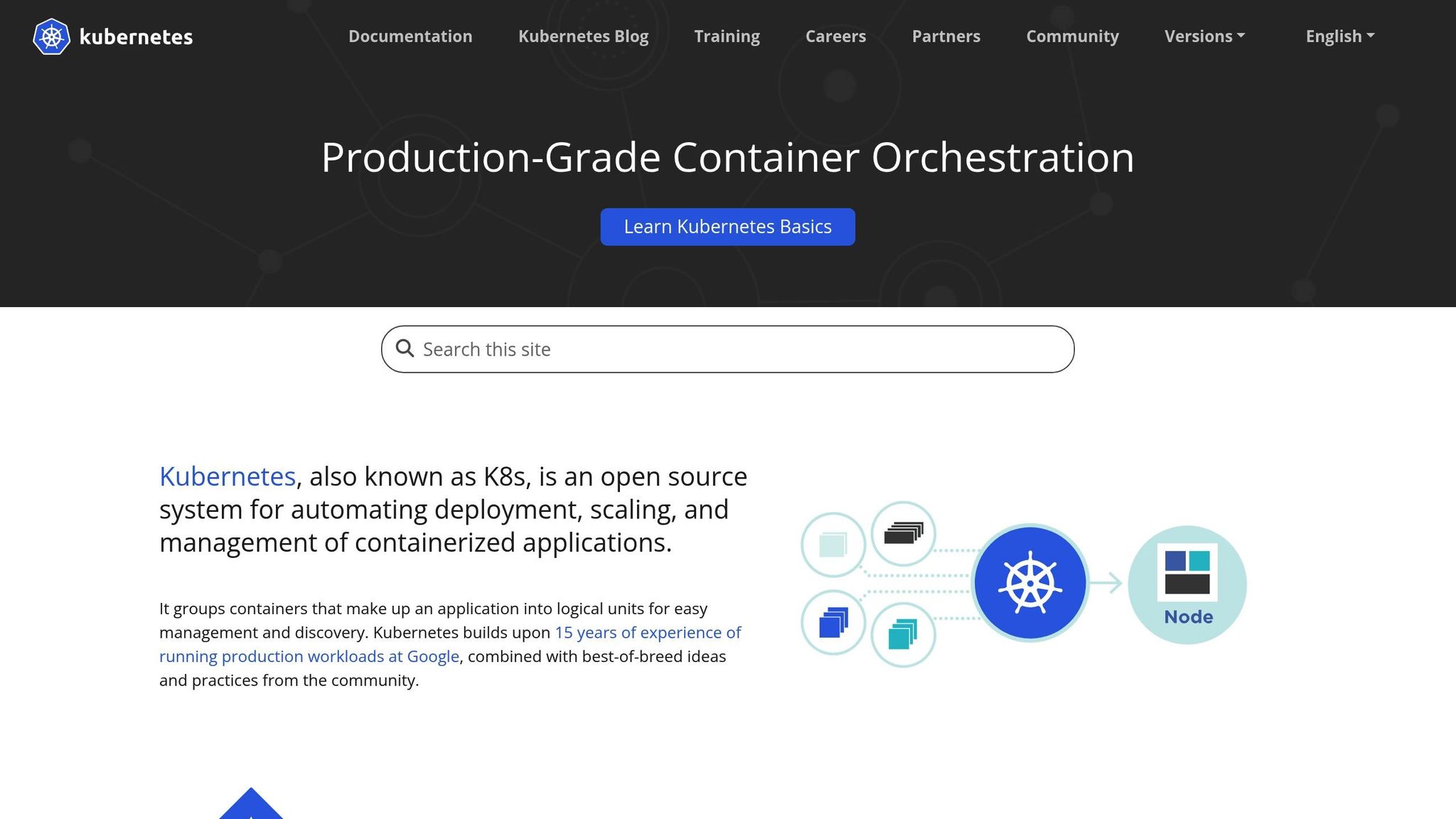

Now past its tenth anniversary, Kubernetes has reached a new level of maturity. According to survey data cited in the discussion, 65% of organizations have been using Kubernetes for more than five years, a sign that the technology has moved from experimental platform to cornerstone of enterprise infrastructure. It has become the default choice for container orchestration, often overshadowing simpler alternatives such as standalone Docker or even serverless options.

What explains this staying power? The surrounding ecosystem has grown enormously, with a vast array of tools, patterns, and best practices that ease adoption. Managed Kubernetes services such as Amazon Elastic Kubernetes Service (EKS), Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS) have also reduced the effort of spinning up and managing clusters. As one expert put it:

"Kubernetes is no longer a choice – it’s the default. Even for small workloads, the advantages of Kubernetes far outweigh running standalone Docker."

Clusters at Scale: How Many is Too Many?

The conversation revealed an increasingly common trend: organizations are managing large-scale deployments with more than 20 clusters spread across clouds, data centers, and edge locations. This multicluster approach offers redundancy, resilience, and regional differentiation. However, it also introduces operational complexity. The question arises: Should every team have a separate cluster, or should organizations consolidate workloads into fewer, larger clusters?

Experts agreed that the approach depends on:

- Workload Isolation Needs: Sensitive applications may benefit from isolated clusters.

- Team Autonomy: Giving teams control over their clusters can reduce bottlenecks but increase fragmentation.

- Operational Overhead: Managing hundreds of clusters requires robust automation and monitoring.

- Risk Management: When workloads are consolidated into a single large cluster, one cluster failure can be catastrophic.

The consensus? There’s no one-size-fits-all solution. Organizations must weigh their risk profiles, operational capabilities, and future growth trajectories before choosing between a multicluster and a consolidated approach.
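The experts offered no formula for this decision, but the four factors above can be written down as an explicit checklist so the reasoning is recorded and reviewable. The Python sketch below is purely illustrative: `WorkloadProfile`, `lean_towards`, the equal weighting of factors, and the choice to break ties toward a shared cluster (fewer clusters, less overhead) are all assumptions, not something the panel proposed.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class WorkloadProfile:
    """Hypothetical inputs, one per factor discussed above."""
    sensitive: bool                # workload isolation needs
    team_wants_autonomy: bool      # team autonomy
    has_fleet_automation: bool     # capacity to absorb operational overhead
    tolerates_shared_outage: bool  # risk management


def lean_towards(p: WorkloadProfile) -> tuple[str, list[str]]:
    """Tally reasons for a dedicated vs. shared cluster.

    Every factor counts equally and ties go to 'shared'; both choices
    are illustrative assumptions.
    """
    dedicated: list[str] = []
    shared: list[str] = []
    if p.sensitive:
        dedicated.append("isolation: sensitive workload")
    if p.team_wants_autonomy:
        dedicated.append("autonomy: team wants its own cluster")
    if not p.has_fleet_automation:
        shared.append("overhead: no automation to run many clusters")
    if p.tolerates_shared_outage:
        shared.append("risk: workload can share a failure domain")
    else:
        dedicated.append("risk: workload needs its own failure domain")
    if len(dedicated) > len(shared):
        return "dedicated", dedicated
    return "shared", shared


if __name__ == "__main__":
    verdict, reasons = lean_towards(WorkloadProfile(True, True, True, False))
    print(verdict)
    for reason in reasons:
        print(" -", reason)
```

For example, a sensitive workload owned by an autonomous team that cannot share a failure domain returns `"dedicated"` with three supporting reasons. A real evaluation would weight the factors by your own risk profile rather than counting them equally.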

AI: A Double-Edged Sword in Kubernetes Management

AI Workloads on the Rise

Artificial intelligence (AI) is quickly becoming a core application area for Kubernetes. According to survey data cited in the discussion, 90% of enterprises expect their AI workloads on Kubernetes to grow. AI fits Kubernetes well because the platform supports GPU acceleration, resource scaling, and workload portability.

However, AI workloads bring unique challenges, such as the high cost of GPU-based infrastructure and unpredictable resource requirements. This has produced a seemingly paradoxical trend: even as AI workloads proliferate, organizations are turning to AI-powered tools to rein in Kubernetes costs. Some 92% of respondents said they are using, or plan to use, AI for cost management.
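To make the GPU cost problem concrete, the arithmetic for idle spend is simple: total GPU-hours times price, multiplied by the fraction of time the GPUs were not busy. The sketch below assumes you already have an average utilization figure from your monitoring stack; the function name `idle_gpu_cost` and the $2.50/hour rate in the example are hypothetical placeholders, not provider prices.

```python
def idle_gpu_cost(gpu_count: int, hourly_rate: float, hours: float,
                  avg_utilization: float) -> float:
    """Estimate how much of a GPU bill paid for idle capacity.

    avg_utilization is a fraction between 0 and 1 (for example, averaged
    from a GPU metrics exporter); hourly_rate is a placeholder per-GPU price.
    """
    if not 0.0 <= avg_utilization <= 1.0:
        raise ValueError("avg_utilization must be between 0 and 1")
    total_spend = gpu_count * hourly_rate * hours
    # The share of spend that bought capacity nobody used.
    return total_spend * (1.0 - avg_utilization)


if __name__ == "__main__":
    # Hypothetical: 8 GPUs at $2.50/hour for a 720-hour month, 35% busy.
    print(f"idle spend: ${idle_gpu_cost(8, 2.50, 720, 0.35):,.2f}")
```

Run as a script, it prints `idle spend: $9,360.00`: 8 × 2.50 × 720 = $14,400 of GPU capacity, 65% of which sat idle. Numbers like this are what the AI-based cost tools mentioned above aim to surface automatically.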

Can AI Manage Kubernetes Effectively?

The potential for AI extends beyond optimizing costs. There is growing interest in AI-managed control loops for Kubernetes clusters. Imagine an operator that automatically scales, distributes workloads, or mitigates failures based on predictive analytics and real-time telemetry. While promising, experts were skeptical about its readiness:

"Non-determinism in AI gives me pause. We need to trust these systems to make critical decisions about availability and performance. We’re not there yet."
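For contrast, Kubernetes' own Horizontal Pod Autoscaler (HPA) is a control loop that scales workloads automatically, but a deterministic one: given the same metrics it always makes the same decision, which is what makes it auditable. The core rule in the Kubernetes documentation is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default). The Python sketch below implements only that core rule; the function name and the `max_replicas=10` default are illustrative, and the real controller adds stabilization windows, scaling policies, and handling for missing or unready pods.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, tolerance: float = 0.1,
                         min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Simplified version of the HPA replica calculation.

    desired = ceil(current_replicas * current_metric / target_metric),
    clamped to [min_replicas, max_replicas]. Stabilization windows and
    scaling policies from the real controller are omitted.
    """
    ratio = current_metric / target_metric
    # Do nothing when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Respect the minReplicas/maxReplicas bounds configured on the HPA.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target.
    print(hpa_desired_replicas(4, 90, 60))
```

With 4 pods averaging 90% CPU against a 60% target, the ratio is 1.5 and the sketch returns 6 replicas; at 63% CPU the 5% deviation falls inside the tolerance and it keeps 4.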

For now, AI may be better suited to provide insights and recommendations rather than take direct control. Examples include identifying underutilized resources, spotting seasonal traffic trends, or flagging configuration drift.
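The first of these, spotting underutilized resources, can start as a plain rule before any AI is involved, and such a rule is a useful baseline for judging what an AI-based recommender adds. The sketch below compares each container's CPU request with its observed 95th-percentile usage and suggests a smaller request. The `ContainerUsage` type, the `rightsizing_hints` function, the 50% threshold, and the 20% headroom are assumptions chosen for illustration; the usage numbers would come from whatever monitoring system you run. Consistent with the advice above, it only recommends and changes nothing in the cluster.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ContainerUsage:
    name: str
    cpu_request_m: int  # requested CPU in millicores (from the pod spec)
    cpu_p95_m: int      # observed 95th-percentile CPU in millicores (from monitoring)


def rightsizing_hints(containers: list[ContainerUsage], max_ratio: float = 0.5,
                      headroom_pct: int = 20) -> list[tuple[str, int, int]]:
    """Flag containers whose p95 usage is below max_ratio of their request.

    Returns (name, current_request_m, suggested_request_m) tuples, where the
    suggestion is p95 usage plus headroom_pct percent, rounded up.
    Thresholds are illustrative defaults, not recommendations.
    """
    hints = []
    for c in containers:
        if c.cpu_request_m <= 0:
            continue  # nothing requested, nothing to right-size
        if c.cpu_p95_m / c.cpu_request_m < max_ratio:
            # Integer ceiling division avoids floating-point rounding surprises.
            suggested = -(-c.cpu_p95_m * (100 + headroom_pct) // 100)
            hints.append((c.name, c.cpu_request_m, suggested))
    return hints


if __name__ == "__main__":
    sample = [
        ContainerUsage("api", cpu_request_m=1000, cpu_p95_m=200),
        ContainerUsage("worker", cpu_request_m=500, cpu_p95_m=450),
    ]
    for name, current, suggested in rightsizing_hints(sample):
        print(f"{name}: request {current}m, p95-based suggestion {suggested}m")
```

For a container requesting 1000m CPU whose p95 usage is 200m, it suggests 240m; a container using 450m of a 500m request is left alone.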

The Role of Platform Engineering: Hype vs. Reality

The Rise of Platform Teams

Platform engineering has emerged as a key area of interest for organizations looking to tame Kubernetes complexity. However, the conversation revealed a sobering reality: while 80% of organizations claim to have a mature platform engineering team, over half admitted that their clusters are still "snowflakes," hand-built one-offs that depend on manual operations.

This disconnect suggests many organizations are mistaking tooling (e.g., deploying Backstage, Argo CD, or Flux) for maturity. True platform engineering requires more than technology – it demands cultural change, robust automation, and clear accountability.

"Many companies think deploying a platform tool equals maturity. But a mature platform engineering team is a culture, not a product."
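One practical way to tell a real platform from a fleet of snowflakes is to check every cluster against a declared baseline and see how far each one has drifted. The sketch below does that over plain dictionaries; in practice the settings might be collected from each cluster or from a GitOps repository. `find_snowflakes`, the setting names, and the cluster names are hypothetical.

```python
from __future__ import annotations


def find_snowflakes(baseline: dict[str, str],
                    clusters: dict[str, dict[str, str]]) -> dict[str, dict[str, tuple]]:
    """Report, per cluster, every setting that differs from the baseline.

    Each difference is (expected, actual); None means the key is missing
    on that side. Clusters that match the baseline are omitted.
    """
    report: dict[str, dict[str, tuple]] = {}
    for cluster, settings in clusters.items():
        diffs = {}
        # Compare the union of keys so extra and missing settings both show up.
        for key in sorted(baseline.keys() | settings.keys()):
            expected, actual = baseline.get(key), settings.get(key)
            if expected != actual:
                diffs[key] = (expected, actual)
        if diffs:
            report[cluster] = diffs
    return report


if __name__ == "__main__":
    golden = {"k8s_version": "1.31", "cni": "cilium", "gitops": "argocd"}
    fleet = {
        "prod-eu": {"k8s_version": "1.31", "cni": "cilium", "gitops": "argocd"},
        "prod-us": {"k8s_version": "1.29", "cni": "cilium", "hotfix": "applied-by-hand"},
    }
    print(find_snowflakes(golden, fleet))
```

Here `prod-eu` matches the baseline and is omitted, while `prod-us` is reported for an older version, a missing `gitops` setting, and a hand-applied hotfix. An empty report across the fleet is one measurable signal of platform maturity, though it says nothing about culture or accountability.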

Centralization vs. Decentralization

Another tension lies between centralization and autonomy. Should platform teams create rigid guardrails, or should they empower teams to innovate? The answer may vary:

- Centralization works well for large organizations with strict compliance needs or complex architectures.

- Decentralization thrives in smaller, agile teams that benefit from autonomy.

Ultimately, platform engineering must balance these forces, ensuring the platform provides guardrails while still supporting innovation.
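A guardrail can be narrow: enforce a few non-negotiable rules and leave everything else to the teams. The sketch below checks a Deployment-style manifest for an `owner` label and for CPU and memory limits on every container. Both rules are hypothetical examples of a policy, and `check_guardrails` is an illustrative name; in a real cluster such rules are usually enforced at admission time by a policy engine such as Kyverno or OPA Gatekeeper rather than by a standalone script.

```python
from __future__ import annotations

REQUIRED_LABELS = ("owner",)         # hypothetical org-wide label policy
REQUIRED_LIMITS = ("cpu", "memory")  # hypothetical resource-limit policy


def check_guardrails(manifest: dict) -> list[str]:
    """Return policy violations for a Deployment-style manifest (parsed YAML/JSON)."""
    violations = []
    labels = manifest.get("metadata", {}).get("labels", {})
    for label in REQUIRED_LABELS:
        if label not in labels:
            violations.append(f"metadata.labels.{label} is required")
    pod_spec = manifest.get("spec", {}).get("template", {}).get("spec", {})
    for container in pod_spec.get("containers", []):
        limits = container.get("resources", {}).get("limits", {})
        for resource in REQUIRED_LIMITS:
            if resource not in limits:
                violations.append(
                    f"container {container.get('name', '<unnamed>')}: "
                    f"resources.limits.{resource} is required")
    # Everything else (images, replicas, probes, ...) is left to the team.
    return violations


if __name__ == "__main__":
    # Trimmed Deployment for illustration; selector and other fields omitted.
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "web", "labels": {"app": "web"}},
        "spec": {"template": {"spec": {"containers": [
            {"name": "web", "image": "nginx:1.27",
             "resources": {"limits": {"cpu": "500m"}}},
        ]}}},
    }
    for violation in check_guardrails(deployment):
        print(violation)
```

The sample manifest has no `owner` label and no memory limit, so the script prints two violations; image choice, replica count, and everything else remain the team's decision.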

Legacy Migration: Modernizing Workloads

Despite Kubernetes’ maturity, legacy workloads remain a hurdle. Many organizations are turning to projects such as KubeVirt, a CNCF project that runs virtual machines (VMs) alongside containers on Kubernetes. This lets them modernize incrementally while taking advantage of Kubernetes tooling such as service discovery, secrets management, and observability.

The experts noted that while running VMs in Kubernetes is gaining traction, it’s not a panacea. Legacy workloads often come with technical debt that can’t always be solved by containerization or Kubernetes. However, for organizations with hybrid environments or hard-to-migrate applications, the ability to run VMs in Kubernetes offers a valuable bridge.
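As an illustration of running a VM next to containers, the sketch below builds a KubeVirt `VirtualMachine` manifest, modeled on the example in KubeVirt's getting-started documentation, plus an ordinary Kubernetes `Service` that selects the VM's pod by label so other workloads can reach it through normal service discovery. The VM name, disk image, memory size, and ports are hypothetical, and field names should be checked against the KubeVirt version you run. The output is JSON, which `kubectl apply -f` accepts just as it accepts YAML.

```python
import json


def kubevirt_vm(name: str, image: str, memory: str = "128Mi") -> dict:
    """KubeVirt VirtualMachine booting from a container disk (illustrative values)."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name},
        "spec": {
            "runStrategy": "Always",
            "template": {
                # Labels here are propagated to the pod that hosts the VM.
                "metadata": {"labels": {"app": name}},
                "spec": {
                    "domain": {
                        "devices": {
                            "disks": [{"name": "rootdisk", "disk": {"bus": "virtio"}}],
                            # Masquerade: the VM sits behind the pod's IP on the pod network.
                            "interfaces": [{"name": "default", "masquerade": {}}],
                        },
                        "resources": {"requests": {"memory": memory}},
                    },
                    "networks": [{"name": "default", "pod": {}}],
                    "volumes": [{"name": "rootdisk", "containerDisk": {"image": image}}],
                },
            },
        },
    }


def service_for(name: str, port: int, target_port: int) -> dict:
    """Plain Kubernetes Service selecting the VM's pod by its label."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port, "protocol": "TCP"}],
        },
    }


if __name__ == "__main__":
    # Hypothetical legacy app packaged as a container disk image.
    items = [
        kubevirt_vm("legacy-billing", "registry.example.com/legacy-billing-disk:latest"),
        service_for("legacy-billing", 80, 8080),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

This relies on KubeVirt propagating the template's labels to the pod that hosts the VM and on the guest listening on port 8080; the `masquerade` interface forwards traffic arriving at the pod's IP into the guest.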

Predictions for Kubernetes’ Future

Based on current trends, several predictions were made for Kubernetes’ trajectory in the next 3-5 years:

- AI in Kubernetes: One in three enterprises may integrate AI co-pilots for Kubernetes management, but adoption will likely be experimental and uneven.

- KubeVirt Adoption: 50% of clusters may eventually host VM workloads, bridging the gap between traditional and cloud-native applications.

- Proliferation of Clusters: 30% of enterprises could operate over 100 clusters, driven by edge computing and multicloud strategies.

While these predictions are optimistic, they rest on the assumption that organizations can overcome the operational and cultural challenges discussed earlier.

Key Takeaways

- Kubernetes Is the Default: Kubernetes’ widespread adoption reflects its maturity. Even small workloads benefit from its standardization and ecosystem.

- AI Is a Growing Force: While AI workloads are growing within Kubernetes, AI-driven management remains experimental.

- Platform Engineering Needs Maturity: True platform engineering requires cultural and operational maturity, not just tools like Backstage or Argo CD.

- Legacy and Modern Workloads Can Coexist: Tools like KubeVirt allow organizations to bridge the gap between VMs and containers.

- Operational Complexity Must Be Tamed: Scaling to hundreds of clusters or adopting multicloud strategies demands robust automation and monitoring.

- Edge Computing Is Expanding: Kubernetes is becoming a key enabler of edge deployments, but the definition of "edge" remains fluid.

- Stay Iterative: Platforms, like any technology, require constant evolution. Don’t assume your current Kubernetes implementation is the final destination.

Conclusion

Kubernetes has come a long way in its first decade, evolving from a groundbreaking open-source project to an enterprise mainstay. But its maturity brings new challenges: cost management, operational complexity, and legacy integration. As the Kubernetes ecosystem continues to grow, organizations must focus on cultural change, robust automation, and thoughtful architecture to stay ahead.

Whether you’re scaling to hundreds of clusters or exploring AI-driven optimizations, the key to Kubernetes success lies in balancing innovation with operational discipline. While the platform may not be a silver bullet, its flexibility ensures there’s a path forward for almost every workload.

For IT executives, DevOps engineers, and system architects, now is the time to evaluate your Kubernetes strategy. Are you keeping pace with the technology? More importantly, are you using it to drive measurable business outcomes?

The Kubernetes journey is far from over – let’s see where the next decade takes us.

Source: "A Decade of Kubernetes: Cost, Code, and Complexity" – Rawkode Academy, YouTube, Aug 22, 2025 – https://www.youtube.com/watch?v=cF_BnevjhPU

Use: Embedded for reference. Brief quotes used for commentary/review.