Kubernetes on Edge for IoT Workloads

Managing IoT workloads at the edge is no small feat. With 75% of enterprise data projected to be processed at the edge by 2025, organizations need efficient, scalable solutions to handle thousands of devices across diverse environments. Kubernetes, especially lightweight versions like K3s and KubeEdge, offers a way to simplify edge management, automate deployments, and ensure reliability even in challenging conditions.

Key Takeaways:

- Challenges: Limited bandwidth, hardware diversity, and security risks make edge management complex.

- Kubernetes Advantages: Automates scaling, self-healing, and configuration management. Lightweight distributions like K3s and KubeEdge operate on resource-constrained devices.

- Design Patterns: Use hub-and-spoke models for centralized management or 3-tier IoT architectures for real-time processing at the edge.

- Security: Zero-trust models, encrypted communication, and RBAC are critical for securing edge devices.

Kubernetes transforms edge environments by enabling automation, consistency, and offline operation, making it a critical tool for IoT deployments.

Main Challenges in Managing IoT Workloads

Managing Complexity at Scale

Shifting from a few centralized servers to thousands of edge nodes spread across diverse locations brings a whole new level of operational complexity. Each site must maintain consistent configurations, synchronized software versions, and standardized security policies. But here’s the catch: manual management simply can’t keep up. Managing 1,000 clusters is an entirely different beast compared to handling just one.

Without automation, configuration drift becomes a major headache. One gateway might be running version 2.3 of your application, another version 2.1, and a third might have custom patches applied by a field technician. The variety of hardware – ranging from ARM processors to x86 chips and specialized peripherals – only adds to the challenge, making it even harder to maintain control over the entire fleet.

To make matters worse, most edge locations don’t have on-site IT support. Think about a retail store or a warehouse – there’s usually no one available to troubleshoot a failed deployment by logging into a gateway. That’s why devices need to support automated provisioning. Ideally, they should arrive pre-configured to set themselves up automatically. Without this, hardware often ends up unused because manual installation is just too complex.

These operational challenges naturally lead into another critical area: the limitations of network connectivity in edge environments.

Network Limits and Local Data Processing

Edge environments often operate in network conditions that would cripple traditional cloud-based systems. Connectivity can be spotty – imagine offshore oil rigs, moving trucks, or underground mines. Latency and bandwidth constraints make constant communication with the cloud impractical.

Some workloads, like autonomous robots or industrial safety systems, need responses in milliseconds. Sending data to the cloud and waiting for a reply introduces delays that just aren’t acceptable. Streaming raw video or high-frequency sensor data to the cloud isn’t much better – it can quickly overwhelm network bandwidth and rack up egress costs. On top of that, standard Kubernetes assumes consistent connectivity; if latency exceeds the node-status-update-frequency, the control plane might mistakenly flag remote nodes as unhealthy.

"Edge systems keep working when connectivity falters." – WWT Research

To address this, edge workloads need to operate autonomously. They must cache state locally so they can keep functioning even when the network goes down, syncing back to the cloud once connectivity is restored. This need for offline autonomy fundamentally changes how applications are designed and deployed.

Limited Resources and Security Concerns

Another challenge? Resources. Standard Kubernetes distributions require approximately 1 GB of memory. But many edge gateways only have 128 MB to 256 MB of RAM. This means traditional orchestration tools can hog resources that IoT applications desperately need. Lightweight alternatives are crucial to managing these resource-constrained environments effectively.

Security is another major concern. Unlike data center servers, which are housed in secure, climate-controlled rooms, edge devices are often located in less controlled environments – on factory floors, in retail storage areas, or even outdoors. Physical access to these devices is much easier, increasing the risk of tampering, theft, or damage. When these devices connect to public networks, the lack of a secure network perimeter further expands the threat surface.

| Challenge Category | Specific Issue | Impact on Operations |

|---|---|---|

| Scalability | Fleet Management | Managing 1,000 clusters adds operational complexity |

| Connectivity | Intermittent Network | Remote nodes may be flagged as "unhealthy" due to latency issues |

| Resources | Memory Overhead | Standard Kubernetes consumes resources needed for IoT applications |

| Environment | Physical Access | Increased risk of tampering, theft, or damage in uncontrolled settings |

Traditional security approaches, which rely on network perimeters and centralized monitoring, fall short in these scenarios. Instead, security must be built directly into the devices. This includes hardware-based roots of trust, full disk encryption, and the ability to remotely disable physical ports when a threat is detected.

These challenges highlight the unique demands of edge environments, setting the stage for solutions tailored specifically to Kubernetes in managing IoT workloads.

Kubernetes-Native Edge and IoT Solutions: Open Source k0s and k0smotron by Mirantis

How Kubernetes Solves Edge Problems

Lightweight Kubernetes Distributions Comparison for Edge IoT Deployments

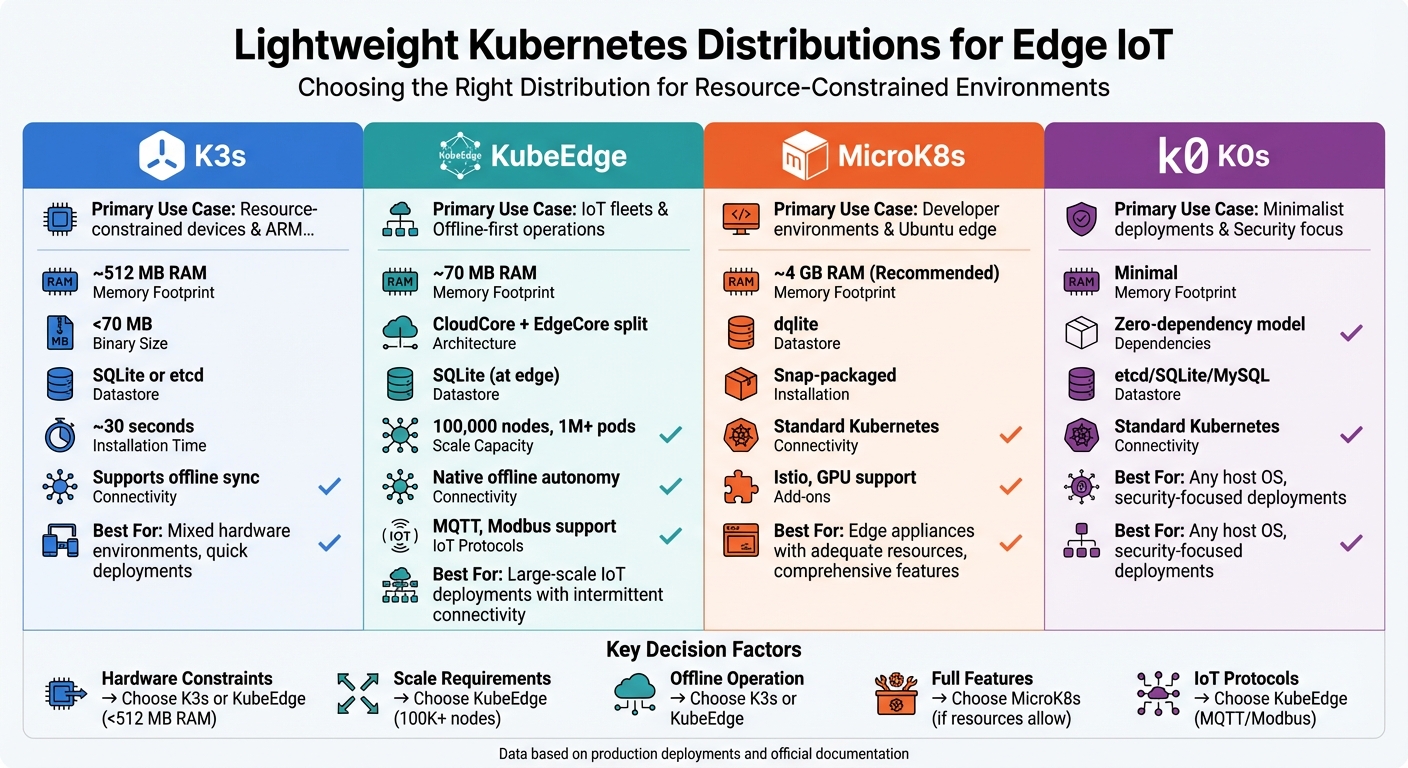

Lightweight Kubernetes Options

Standard Kubernetes often surpasses the hardware limits of edge devices, but lightweight distributions address this issue effectively. Take K3s, for instance – it streamlines Kubernetes into a single binary under 70 MB. It can operate on devices with as little as 512 MB of RAM and a single CPU core. Installation is quick, usually taking around 30 seconds, and it uses SQLite as the default datastore instead of the more memory-intensive etcd.

KubeEdge takes a different route by splitting its architecture into CloudCore and EdgeCore components. This design allows it to scale up to 100,000 nodes and over a million pods per control plane. It also supports IoT protocols like MQTT and Modbus, making it ideal for connecting sensors and actuators that don’t rely on HTTP.

Meanwhile, MicroK8s offers a snap-packaged distribution with optional add-ons for services like Istio and GPU support. K0s, on the other hand, focuses on a zero-dependency model that can run on any host operating system. Each of these distributions tackles edge resource constraints differently, enabling Kubernetes to function on hardware not originally designed for full-scale orchestration.

| Feature | K3s | KubeEdge | MicroK8s | K0s |

|---|---|---|---|---|

| Primary Use Case | Resource-constrained/ARM | IoT fleets/Offline use | Developer/Ubuntu edge | Minimalist/Security focus |

| Memory Footprint | ~512 MB RAM | ~70 MB RAM | ~4 GB (Recommended) | Minimal |

| Datastore | SQLite or etcd | SQLite (at edge) | dqlite | etcd/SQLite/MySQL |

| Connectivity | Supports offline sync | Native offline autonomy | Standard Kubernetes | Standard Kubernetes |

These lightweight distributions make it easier to scale and manage edge devices by adopting a declarative model and automating key tasks like scaling and self-healing.

Declarative Configuration and Auto-Scaling

Kubernetes simplifies edge management with its declarative approach. In this model, you define the desired state – like the number of replicas, resource limits, or container images – using a YAML manifest. Kubernetes then takes care of the steps to achieve and maintain that state.

"Declarative orchestration… allows you to describe the desired state of your application and infrastructure, rather than the specific steps required to achieve that state."

Edge-optimized setups ensure that local agents enforce the declared state, even if the network connection is interrupted.

Scaling is as simple as updating the replica count in your manifest. Kubernetes automatically schedules the new containers across available edge resources. It continuously monitors the system, comparing the actual state to the desired one, and reschedules or restarts containers as needed. This self-healing behavior is crucial in edge environments where on-site IT support is often unavailable. By adjusting workloads automatically, Kubernetes minimizes latency and resource challenges common in edge setups.

GitOps integration enhances this process by storing configurations as code in Git. This enables automated rollouts, effortless rollbacks, and a clear audit trail across IoT deployments. As Justin Barksdale, Principal Architect at Spectro Cloud, explains:

"Cluster Profiles provide the declarative (desired) state of what our Kubernetes cluster should look like… and define, to an agent deployed in the cluster, what the cluster should look like. The agent enforces that declared state, even if connectivity to the control plane is lost."

Security and Access Control

Deploying Kubernetes at the edge often means working with devices in public or unmonitored locations, making security a top concern. A zero-trust model is essential, assuming devices are compromised from the start. ZEDEDA highlights this with its zero-trust architecture:

"ZEDEDA’s state-of-the-art and market-leading Zero Trust security architecture assumes that edge nodes distributed in the field are physically accessible, in addition to not having a defined network perimeter."

Role-Based Access Control (RBAC) centralizes permission management for thousands of devices, while cryptographic identifiers replace local logins, reducing the risk of unauthorized access. NetworkPolicies restrict pod-to-pod communication to the bare minimum, and distributed firewalls enforce strict routing rules.

K3s enhances security with automated TLS and a reduced footprint, while also allowing the disabling of unnecessary I/O ports, lowering vulnerability risks. Advanced edge operating systems add another layer with volume encryption and TPM-locked decryption keys. These keys are released only after verifying the software state through remote attestation.

For environments behind firewalls or in air-gapped setups, unidirectional network tunneling simplifies connectivity. In this model, edge nodes initiate outbound connections, allowing the control plane to manage clusters without requiring bidirectional communication. This egress-only approach makes firewall configurations easier and strengthens security. Together, these measures provide the confidence needed to deploy Kubernetes on edge devices, even for critical IoT applications.

sbb-itb-f9e5962

Design Patterns for Kubernetes on Edge

Kubernetes has become a game-changer for managing edge environments, and applying specific design patterns can take deployment efficiency to the next level.

Hub-and-Spoke Setup

The hub-and-spoke model uses a centralized hub to manage a network of edge clusters – referred to as spokes. This setup provides a single dashboard for overseeing operations while allowing each edge site to function independently in case of network disruptions.

In this architecture, the hub stores configurations as code in Git and sends updates to the spokes. Each edge site has agents that pull these updates and enforce them locally, ensuring that workloads run smoothly even when connectivity is spotty. This approach operates on an eventual consistency basis, meaning updates sync when the connection is restored. Because spokes use outbound-only connections, firewall configurations are simpler to manage. A single cluster profile can be defined and then used to deploy updates programmatically across all sites.

"Managing a fleet of hundreds or thousands of clusters is far more complex than managing a single cluster." – WWT Research

A cost-conscious variation of this model involves using remote worker nodes. Instead of deploying a full Kubernetes control plane at every edge site, the control plane remains centralized, and only worker nodes are placed at the edge. This reduces hardware costs but requires a stable, low-latency network. If network latency exceeds Kubernetes’ node-status-update-frequency, the central control plane might flag edge nodes as unreachable.

This centralized approach can also scale to broader architectures, where Kubernetes manages operations across interconnected device, edge, and cloud layers.

3-Tier IoT Architecture

The three-tier IoT architecture – comprising device, edge, and cloud layers – allows Kubernetes to integrate real-time local processing with centralized computing resources. The device tier includes sensors, cameras, and other endpoint devices. The edge tier hosts Kubernetes clusters to handle tasks like machine learning inference, data aggregation, and MQTT message brokering. Meanwhile, the cloud tier focuses on compute-heavy tasks, such as AI model training and managing operations across the entire network.

At the edge, Kubernetes serves as a protocol adapter. Tools like Akri can register physical devices (e.g., cameras and sensors) as Kubernetes-native resources, enabling the deployment of workloads that process data on-site. For example, an edge node might run a containerized inference engine to analyze video streams, sending only alerts or metadata to the cloud.

The demand for hybrid AI models is growing rapidly, with deep learning expected to play a role in over 65% of edge use cases by 2027, up from less than 10% in 2021. These models often involve training in the cloud and deploying lightweight inference containers at the edge. Kubernetes supports diverse hardware setups, including x86 servers and ARM-based gateways, using multi-architecture container images. By leveraging node labels and taints, Kubernetes ensures that tasks requiring specific hardware, such as GPUs, are assigned to the appropriate nodes.

These design patterns set the stage for deploying Kubernetes on the edge in a cost-effective way.

Cost-Saving Deployment Methods

Running Kubernetes on bare metal – without a hypervisor – can cut down on both virtualization overhead and licensing costs. For example, retail environments can deploy bare-metal clusters with built-in load balancing and Git-synced configurations to maintain operations even during network outages.

For smaller teams or limited resources, a single-node cluster or a compact three-node high-availability setup can work well. In the latter, each node serves as both a control plane and a worker, reducing hardware needs. Microsoft’s AKS Edge Essentials is another option, requiring just 4 GB of RAM and 2 vCPUs. This lets industrial operators run Linux workloads alongside Windows HMIs on existing hardware, avoiding the need for dedicated Linux servers.

"The remote worker approach eliminates the overhead of having a dedicated control plane at each location." – Red Hat Blog

Optimizing container images is another way to save costs. Using multi-stage Docker builds and lightweight base images like Alpine can shrink image sizes, reducing bandwidth usage and speeding up updates across multiple sites. Applying ResourceQuota and limits ensures that edge applications don’t overuse CPU and memory resources. For long-term stability, Microsoft offers a 10-year Long-Term Servicing Channel for the host OS in certain edge setups, minimizing the need for frequent updates and associated costs.

Steps to Implement Kubernetes on Edge

Transitioning from theoretical designs to actual deployment of Kubernetes at the edge requires a clear and methodical approach. Start by analyzing your workload environment, choosing tools that align with your specific needs, and constructing an architecture that balances scalability with cost-efficiency.

Evaluate Your Workloads and Constraints

Begin by assessing the hardware requirements of each workload. For instance, K3s can run on devices with as little as 512 MB of RAM, while MicroK8s demands around 4 GB of RAM per node. If you’re working with devices like Raspberry Pi gateways or industrial controllers, ensure the Kubernetes distribution supports the necessary architecture, such as ARMv7 or ARM64.

Connectivity is another key factor. Both K3s and KubeEdge support offline operations, which can be crucial for edge deployments. Workloads requiring real-time responses or localized data processing should remain at the edge to minimize latency.

Before committing to a specific Kubernetes distribution, test your StatefulSet or DaemonSet workloads on the target hardware. Track metrics such as cold-start times, CPU usage, and storage latency. Simulate potential failures – like disconnecting network cables or shutting down nodes – to evaluate system recovery. Also, deploy monitoring tools such as Prometheus or OpenTelemetry from the outset to ensure your edge services meet expected performance levels.

Once you’ve mapped out your workload constraints, you can move on to selecting the tools that best meet your needs.

Choose the Right Kubernetes Tools

After understanding your workload requirements, select a Kubernetes distribution that fits your constraints. For lightweight deployments, K3s is a great option. Packaged as a single binary under 70 MB, it can bring a node online in about 30 seconds. Designed for mixed hardware environments, especially ARM-based devices, K3s uses SQLite for single-node setups instead of etcd, reducing resource demands.

KubeEdge offers a cloud-edge split architecture, with CloudCore running in the cloud and EdgeCore on edge devices. It’s tailored for IoT scenarios, supporting offline-first operations and direct hardware interactions through protocols like MQTT and Modbus. KubeEdge can scale to 100,000 nodes and over a million pods per control plane, while operating on as little as 70 MB of memory. This makes it ideal for devices that need to function during prolonged WAN outages.

For edge setups with fewer hardware limitations, MicroK8s provides a comprehensive experience with snap-based installation and built-in add-ons for GPUs and service meshes. Requiring around 4 GB of RAM for a single node, it’s well-suited for edge appliances or small on-premises clusters.

Plan and Optimize Edge Layouts

Once you’ve chosen your tools, decide whether to extend a central cloud control plane to your edge nodes or deploy independent clusters at each site. For offline-first setups, design systems that use local metadata caching and protocols like MQTT to ensure workloads can operate seamlessly during WAN outages. Simplify connectivity by using agent-based pull architectures with secure, egress-only connections to a central management plane, avoiding the need for VPNs or open inbound firewall ports.

To prevent resource bottlenecks, use Kubernetes features like node labels, taints, tolerations, ResourceQuotas, and LimitRanges. For example, allocate AI inference tasks requiring GPUs to specific nodes equipped with the appropriate hardware.

Maintain consistency across geographically distributed nodes by adopting a GitOps workflow, which helps prevent configuration drift. Optimize container images by using multi-stage Docker builds and slim base images like Alpine, which reduces bandwidth usage during updates over unreliable networks. Finally, centralize observability by aggregating telemetry and logs from all clusters with tools like Fluentd and Prometheus – manual monitoring on a per-node basis becomes unmanageable at scale.

Conclusion

Kubernetes has transformed IoT edge management from a labor-intensive, device-by-device challenge into an automated, self-healing system that scales effortlessly and supports zero-touch provisioning across entire fleets. With projections showing that 75% of enterprise-generated data will be processed at the edge by 2025, adopting Kubernetes now provides a unified orchestration layer capable of managing 100,000 geographically dispersed devices as a single, integrated system. This is a far cry from the operational headaches and configuration drift that often plague traditional edge management methods.

This shift isn’t just about adopting new technology – it’s about achieving operational efficiency. By leveraging GitOps workflows and centralized control planes, Kubernetes ensures consistency across all nodes without requiring constant human oversight. Lightweight distributions like K3s and KubeEdge, which can operate on just 70 MB of memory, demonstrate their effectiveness in production settings, making even resource-limited IoT gateways suitable for cloud-native orchestration.

"Managing a few servers is straightforward; managing thousands of distributed edge devices is not." – Plural

For a successful deployment, preparation is key. Start by evaluating your hardware limitations, connectivity patterns, and security needs. Determine whether your workloads demand real-time processing or can function with occasional cloud synchronization. Test your chosen Kubernetes distribution on actual hardware, simulate potential failures, measure cold-start times, and ensure monitoring systems are operational from the outset. These steps can help you avoid common pitfalls and set the stage for a smooth rollout.

If your organization lacks in-depth Kubernetes expertise or operates at scale, collaborating with experienced practitioners can make all the difference. The right guidance can streamline deployment, from selecting the best distribution for your constraints to designing a network architecture that handles intermittent connectivity and optimizes control plane placement for low latency. At TechVZero, we’ve managed deployments at scales exceeding 99,000 nodes and have honed strategies that work in real-world scenarios.

FAQs

How do lightweight Kubernetes options like K3s and KubeEdge handle resource constraints on edge devices?

Lightweight Kubernetes distributions like K3s and KubeEdge are specifically crafted to run efficiently on edge and IoT devices, which often have limited CPU, memory, and storage. K3s takes a streamlined approach by consolidating the Kubernetes control plane into a single binary that’s under 70 MB. It also swaps out the default etcd datastore for an embedded SQLite database, cutting down on resource demands. By removing unnecessary dependencies, K3s becomes a perfect match for low-power devices like the Raspberry Pi, all while maintaining a production-ready Kubernetes environment.

KubeEdge takes a different route with its cloud-edge split design. The full Kubernetes control plane remains in the cloud, while a lightweight agent is deployed on edge devices. This agent handles container management and device connectivity locally, reducing the processing burden on the edge device and cutting down bandwidth usage by processing data closer to its source. Even in cases of unstable network connections, KubeEdge ensures smooth synchronization and reliable performance, making it an excellent choice for IoT workloads.

At TechVZero, we see these solutions as game-changers for building scalable IoT applications. They allow teams to innovate without the need for heavy hardware investments, shifting the focus from infrastructure headaches to creating impactful solutions.

What are the best security practices for running Kubernetes on edge devices?

Running Kubernetes on edge devices comes with its own set of challenges, like limited resources, geographical spread, and physical security risks. To tackle these, you need security practices designed specifically for edge environments.

Start with the control plane. Make sure API communication is encrypted using TLS, and protect sensitive data with encryption at rest. Use strict access controls with role-based access control (RBAC) and strong authentication methods, such as short-lived certificates or external OIDC providers, to limit who and what can interact with your system.

At the workload level, focus on secure container configurations. Run containers as non-root users, disable privilege escalation, and drop any unnecessary Linux capabilities. Enforcing a readOnlyRootFilesystem adds an extra layer of protection. Tools like SELinux, AppArmor, or seccomp profiles can further lock down your workloads. Applying Pod Security Standards or custom RuntimeClasses helps maintain consistent container isolation across all your edge nodes.

Finally, ensure secure provisioning processes for your edge devices. Devices should be onboarded with signed, immutable images and verified identities to minimize risks during initialization. This approach helps create a secure foundation from the start.

By weaving these practices into your strategy, you can leverage Kubernetes’ scalability at the edge while keeping security tight – without needing advanced infrastructure expertise.

How does Kubernetes maintain reliability and handle offline operation in edge environments with unstable connectivity?

Kubernetes brings reliability to edge environments through its self-healing features. The kubelet plays a key role by monitoring container health and restarting any failed containers based on the pod’s restartPolicy. If a node becomes unavailable, Kubernetes steps in to reassign workloads to other nodes, keeping disruptions to a minimum. For stateful services, PersistentVolumes ensure data continuity by re-attaching to new pods as needed.

In cases where edge devices need to operate completely offline, tools like KubeEdge come into play. These tools extend Kubernetes by adding local agents and message hubs that store data and container images locally. This setup allows applications to function independently of the cloud and synchronize seamlessly once connectivity is restored. By combining Kubernetes’ robust design with edge-specific tools, it’s possible to create a dependable, low-maintenance solution for IoT workloads, even in environments where connectivity is unreliable.